NVIDIA at GTC 2025: Building the AI Infrastructure of Everything

NVIDIA CEO Jensen Huang's October 28 keynote at the sold-out NVIDIA GTC AI conference and expo at the Walter E. Washington Convention Center in Washington, D.C., highlighted the company’s pioneering efforts in accelerated computing and artificial intelligence (AI), featured a slew of announcements about significant partnerships and AI investments, and laid out his vision for the future of AI and the company.

He also addressed geopolitical tensions over advanced chip sales to China and revealed that NVIDIA’s Blackwell platform is projected to generate $500 billion in revenue through 2026.

The following day, on October 29, NVIDIA became the first company in history to be valued at more than $5 trillion. This was no coincidence, as the stock surge was spurred by Huang’s unveiling of ground-breaking partnerships and product launches, combined with comments from U.S. President Donald Trump the same day that created optimism about the possibility for NVIDIA sales in China.

After meeting with Huang this week, the President touted NVIDIA’s Blackwell high-powered AI processor as “super duper” and said he planned to discuss it with Chinese leader Xi Jinping during his trip this week for the Asia-Pacific Economic Cooperation Summit. However, after Thursday’s meeting in South Korea, Trump told reporters that while semiconductors were discussed and China intended to “talk to NVIDIA and others about taking chips,” the Blackwell processor itself was not part of the conversation.

Prior to Huang’s remarkable keynote address, the three-day conference also featured a Keynote Pregame discussion event with visionaries from venture, science, infrastructure, security, and manufacturing. It was hosted by Brad Gerstner, Founder and CEO of Altimeter; Patrick Moorhead, Founder, CEO and Chief Analyst of Moor Insights & Strategy; and Kristina Partsinevelos, Nasdaq Correspondent with CNBC.

The conference also included:

• An exposition hall with the world’s largest companies in AI, data centers, cloud computing, power generation, hardware, software, robotics, telecommunications, and more.

• Fireside chats and panel discussions with leaders from organizations including the U.S. Department of Energy, Cadence, Synopsys, NVIDIA, OpenAI, Siemens, Microsoft, Palantir, Mayo Clinic, Cisco, JPMorgan Chase, Argonne National Laboratory, Los Alamos National Laboratory, Caterpillar, Eli Lilly and Co., AWS, and more.

• Educational sessions.

• Training labs and certifications.

Huang’s Vision for the Future

Huang has framed the current AI era as the next industrial revolution, and his keynote outlined how he views it and NVIDIA’s role in it. Describing his aspirations, he said he wants NVIDIA’s technology to be central to everyday life, in everything from cell phone towers to robotic factories to self-driving cars.

To that end, he announced a $1 billion stake in a partnership with networking firm Nokia to develop the AI‑native wireless stack for 6G; new open-source AI technologies for language, robotics and biology designed to broaden access to AI; partnerships with medical organizations to integrate AI for optimized procedures and patient care; a partnership with Uber to develop AI-based robotaxis; and more.

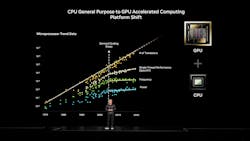

As he outlined his roadmap for U.S. leadership in AI infrastructure and innovation, ranging from data centers to industrial, commercial, scientific, and government applications, he said one of the company’s biggest accomplishments is inventing a new computing model. The first new computing model in 60 years, it leverages NVIDIA’s CUDA platform and uses parallelism, GPUs, and accelerated computing.

“We invented this computing model because we wanted to solve problems that general-purpose computers could not,” Huang said. “We observed that if we could add a processor that takes advantage of more and more transistors, apply parallel computing, add that to a sequential processing CPU, we could extend the capabilities of computing well beyond—and that moment has really come.”

Accelerated computing begins with NVIDIA CUDA‑X libraries across the stack: from cuDNN and TensorRT‑LLM for deep learning, to RAPIDS (cuDF/cuML) for data science, cuOpt for decision optimization, cuLitho for computational lithography, CUDA‑Q and cuQuantum for quantum and hybrid quantum‑classical computing, and more.

“This really is the treasure of our company,” Huang declared.

Omniverse DSX Blueprint Unveiled

Also at the conference, NVIDIA released a blueprint for how other firms should build massive, gigascale AI data centers, or AI factories, in which Oracle, Microsoft, Google, and other leading tech firms are investing billions. The most powerful and efficient of those, company representatives said, will include NVIDIA chips and software. A new NVIDIA AI Factory Research Center in Virginia will use that technology.

This new “mega” Omniverse DSX Blueprint is a comprehensive, open blueprint for designing and operating gigawatt-scale AI factories. It combines design, simulation, and operations across factory facilities, hardware, and software.

• The blueprint expands to include libraries for building factory-scale digital twins, with Siemens’ Digital Twin software first to support the blueprint and FANUC and Foxconn Fii first to connect their robot models.

• Belden, Caterpillar, Foxconn, Lucid Motors, Toyota, Taiwan Semiconductor Manufacturing Co. (TSMC), and Wistron build Omniverse factory digital twins to accelerate AI-driven manufacturing.

• Agility Robotics, Amazon Robotics, Figure, and Skild AI build a collaborative robot workforce using NVIDIA’s three-computer architecture.

NVIDIA Quantum Gains

And then there’s quantum computing. It can help data centers become more energy-efficient and faster with specific tasks such as optimization and AI model training. Conversely, the unique infrastructure needs of quantum computers, such as power, cooling, and error correction, are driving the development of specialized quantum data centers.

Huang said it’s now possible to make one logical qubit, or quantum bit, that’s coherent, stable, and error corrected.

However, these qubits—the units of information enabling quantum computers to process information in ways ordinary computers can’t—are “incredibly fragile,” creating a need for powerful technology to do quantum error correction and infer the qubit’s state.
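To give a rough sense of what "error correction" and "inferring the state" mean, the sketch below simulates a three-bit repetition code in plain Python. It is a classical toy under stated assumptions, not NVIDIA's method and not CUDA-Q code: real quantum error correction uses far larger codes and must extract syndromes without measuring the data qubits directly. All function names here are hypothetical and chosen only for illustration.

```python
import random

def encode(bit):
    """Encode one logical bit as three physical copies."""
    return [bit, bit, bit]

def apply_noise(codeword, flip_prob, rng):
    """Flip each physical bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in codeword]

def syndrome(codeword):
    """Parity checks on neighboring pairs.

    The checks locate a single flipped bit without reading out
    the logical value itself.
    """
    return (codeword[0] ^ codeword[1], codeword[1] ^ codeword[2])

# Each syndrome maps to the index of the bit to flip back (None = no fix).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(codeword):
    """Apply the correction implied by the syndrome."""
    fixed = list(codeword)
    idx = CORRECTION[syndrome(fixed)]
    if idx is not None:
        fixed[idx] ^= 1
    return fixed

def decode(codeword):
    """After correction all three bits agree, so any one gives the logical bit."""
    return codeword[0]

def logical_error_rate(flip_prob, trials, seed=0):
    """Estimate how often the decoded bit differs from the original."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        received = apply_noise(encode(bit), flip_prob, rng)
        if decode(correct(received)) != bit:
            failures += 1
    return failures / trials
```

In this toy model a single flipped bit is always repaired, while two or more flips in the same codeword cause a logical error. That is why the logical error rate falls below the physical flip probability only when the physical rate is already small, and why decoding has to keep pace with the hardware.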

To connect quantum and GPU computing, Huang announced the release of NVIDIA NVQLink, a quantum‑GPU interconnect that enables real‑time CUDA‑Q calls from quantum processing units (QPUs) with latency as low as about four microseconds. It provides quantum researchers with a powerful system for the control algorithms needed for large-scale quantum computing and quantum error correction.

NVQLink lets quantum processors connect to world-leading supercomputing labs including Brookhaven National Laboratory, Fermilab, Lawrence Berkeley National Laboratory (Berkeley Lab), Los Alamos National Laboratory, MIT Lincoln Laboratory, Oak Ridge National Laboratory, Pacific Northwest National Laboratory, and Sandia National Laboratories.

“Just about every single DOE lab [is] working with our ecosystem of quantum computer companies and these quantum controllers so that we can integrate quantum computing into the future of science,” he said.

Cascade of Other NVIDIA GTC Announcements Supporting Huang’s Vision

The company issued many announcements around its GTC 2025 event in Washington, D.C., some of which Huang discussed in his keynote. They covered partnerships, investments, and new products that are also part of the company’s strategy for growth:

• NVIDIA will build seven new AI supercomputers for the U.S. Department of Energy, including partnering with Oracle to build the department’s largest supercomputer for scientific discovery, called the Solstice System, with 100,000 of NVIDIA’s Blackwell chips.

• The chipmaker is working with the Department of Energy’s national labs and top U.S. companies to build the country’s AI infrastructure to support scientific discovery and economic growth and accelerate AI development.

• The chipmaker is collaborating with Palantir Technologies Inc. to build a first-of-its-kind integrated technology stack for operational AI — including analytics capabilities, reference workflows, automation features and customizable, specialized AI agents — to accelerate and optimize complex enterprise and government systems.

• NVIDIA and General Atomics will deliver a high-fidelity, AI-enabled digital twin for fusion energy research, with technical support from the San Diego Supercomputer Center at UC San Diego School, the Argonne Leadership Computing Facility (ALCF) at Argonne National Laboratory, and the National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory.

• The firm is expanding its partnership with CrowdStrike to bring always-on, continuously learning AI agents for cybersecurity to defend critical infrastructure across cloud, data center, and edge environments through Charlotte AI AgentWorks, NVIDIA Nemotron open models, NVIDIA NeMo Data Designer synthetic data, NVIDIA NeMo Agent Toolkit, and NVIDIA NIM microservices.

New NVIDIA products launched at the October 2025 GTC conference included the following:

• The NVIDIA BlueField-4 data processing unit is part of the full-stack BlueField platform that accelerates gigascale AI infrastructure, delivering massive computing performance, supporting 800 gigabits per second (Gb/s) of throughput and enabling high-performance inference processing.

• The NVIDIA AI Factory for Government reference design enables federal agencies and regulated industries to build and deploy new AI platforms and intelligent agents. It provides guidance for deploying full-stack AI infrastructure using the latest NVIDIA AI Enterprise software, meeting security standards for FedRAMP-authorized clouds and high-assurance environments.

• The new open-source AI technologies for language, robotics and biology are designed to broaden access to AI. These models, data, and tools are part of the NVIDIA Nemotron family for AI reasoning, the NVIDIA Cosmos platform for physical AI, NVIDIA Isaac GR00T for robotics and NVIDIA Clara for biomedical AI.

NVIDIA and Nokia Unite to Build the AI Platform for 6G

Extending his Washington, D.C. keynote vision from AI factories to national infrastructure, Huang announced that NVIDIA will invest $1 billion in Nokia and form a deep partnership to create the world’s first AI-RAN platform, a foundation for what he called “America’s return to telecommunications leadership.”

The agreement positions the two companies at the center of a generational redesign of wireless networks, moving from 5G Advanced toward AI-native 6G architectures built on accelerated computing.

“Telecommunications is a critical national infrastructure—the digital nervous system of our economy and security,” Huang said. “Built on NVIDIA CUDA and AI, AI-RAN will revolutionize telecommunications—a generational platform shift that empowers the United States to regain global leadership in this vital infrastructure technology.”

AI-Native Wireless

The partnership unites Nokia’s global RAN portfolio with NVIDIA’s accelerated-computing stack, introducing the new Aerial RAN Computer Pro (ARC-Pro)—a 6G-ready platform combining connectivity, computing, and sensing in one software-defined system. Nokia will embed ARC-Pro into its modular AirScale baseband architecture, enabling existing 5G deployments to evolve into AI-native networks through software upgrades rather than forklift replacements.

T-Mobile U.S. will serve as the first carrier collaborator, launching field trials in 2026 to validate performance and efficiency gains in real-world networks. Dell Technologies will provide the compute backbone with PowerEdge servers engineered for low-touch upgrades and edge scalability.

Distributed AI at the Edge

Analyst firm Omdia projects the AI-RAN market to exceed $200 billion by 2030, creating a new high-growth frontier for telecom providers that can host generative and agentic AI workloads alongside traditional radio functions. NVIDIA and Nokia envision base-station sites evolving into distributed AI grid factories, processing data where it is created and reducing latency for devices ranging from autonomous drones to mixed-reality headsets.

“The next leap in telecom isn’t just from 5G to 6G—it’s a fundamental redesign of the network to deliver AI-powered connectivity,” said Justin Hotard, Nokia president and CEO. “Our partnership with NVIDIA will accelerate AI-RAN innovation to put an AI data center into everyone’s pocket.”

The collaboration also extends into AI-networking solutions, combining Nokia’s SR Linux and telemetry fabric with NVIDIA Spectrum-X Ethernet switching for large-scale AI clusters. Future efforts will explore optical technologies within NVIDIA’s next-generation AI infrastructure, tightening the feedback loop between data-center and wireless ecosystems.

As Huang framed it, AI computing is no longer confined to server rooms—it is becoming the connective tissue of national infrastructure, and the Nokia partnership signals a deliberate U.S. pivot to build intelligent networks that learn and adapt in real time.

Samsung and NVIDIA Forge the World’s First Semiconductor AI Factory

Just two days after NVIDIA’s GTC event in D.C., at the APEC Summit in Seoul, Huang appeared beside Samsung Electronics executive chairman Jay Y. Lee to announce a project that turns the “AI factory” metaphor into reality.

The companies will build a semiconductor AI factory powered by 50,000 NVIDIA GPUs, uniting Samsung’s advanced manufacturing prowess with NVIDIA’s accelerated-computing platforms to create the world’s most intelligent chip-production environment.

“We are at the dawn of the AI industrial revolution—a new era that will redefine how the world designs, builds and manufactures,” said Huang.

“From Samsung’s DRAM for NVIDIA’s first graphics card in 1995 to our new AI factory, we are thrilled to continue our longstanding journey,” Lee added.

Manufacturing at the Speed of Simulation

Samsung will deploy NVIDIA Omniverse to build high-fidelity digital twins of its fabs, integrating real-time telemetry from process tools and logistics systems. These virtual environments will power predictive maintenance, operational optimization, and AI-driven decision-making, shortening time from design to full production.

To accelerate the most compute-intensive workloads, Samsung is incorporating NVIDIA cuLitho into its optical proximity correction (OPC) lithography platform, reporting 20× performance gains in computational lithography and TCAD simulations. EDA leaders Synopsys, Cadence, and Siemens are collaborating with both firms to advance GPU-accelerated chip-design workflows for the AI era.

On the hardware side, NVIDIA RTX PRO Servers with RTX PRO 6000 Blackwell Server Edition GPUs will operate as real-time logistics engines for fab scheduling, anomaly detection, and supply-chain optimization.

Toward Physical AI and Autonomous Robotics

Beyond semiconductors, Samsung’s AI factory doubles as an incubator for physical AI—robotic systems that perceive and act in the real world. Using NVIDIA Isaac Sim and Cosmos world foundation models within Omniverse, Samsung is developing humanoid and industrial robots running on the Jetson Thor edge AI platform.

The same collaboration extends into AI-RAN networks with Korean telecom partners, blending intelligent connectivity with intelligent machinery.

A Closing Loop for the AI Industrial Revolution

For NVIDIA, the Samsung AI factory completes the narrative Huang began in Washington: from data centers to wireless networks to manufacturing floors, accelerated computing now underpins the entire physical economy. If Nokia’s partnership defines the digital nervous system, Samsung’s factory represents the muscle: the tangible machinery of an AI-powered industrial age.

Together, the two announcements bookend GTC 2025’s central theme: AI is no longer merely a tool for the data center; it is in fact becoming the data center model for the world.

Pushing for U.S. Technology Leadership

The GTC conference was purposely located in Washington, D.C. because Huang has become a central figure in President Trump’s push for the U.S. to dominate AI. Many government officials and agency personnel were speakers and attendees.

“The first thing that President Trump asked me for is (to) bring manufacturing back, bring manufacturing back because it’s necessary for national security,” Huang said, adding the company’s AI Blackwell chips are now “in full production” in Arizona, although they will be packaged abroad.

Huang was set to meet with Trump in South Korea this week during the president’s visit for the Asia-Pacific Economic Cooperation Summit.

NVIDIA representatives said in August that the company was seeking clarity from the White House on how it could restart sales of its advanced AI chips to China, after agreeing earlier this year to pay the U.S. government 15% of its China revenues.

In his keynote address at this week’s GTC conference, Huang told the Trump administration that the U.S. must lead in AI while keeping China's developer ecosystem within reach. He warned that the push to isolate China from advanced AI chips could be a mistake and urged balance between U.S. leadership and global access.

“We want America to win this AI race. No doubt about that,” Huang stated, adding, “We want the world to be built on American tech stack. Absolutely the case.”

However, he said, “We also need to be in China to win their developers. A policy that causes America to lose half of the world’s AI developers is not beneficial long term; it hurts us more.”

At Data Center Frontier, we talk the industry talk and walk the industry walk. In that spirit, DCF Staff members may occasionally use AI tools to assist with content. Elements of this article were created with help from OpenAI’s GPT-5.


About the Author

Theresa Houck

Senior Editor

Theresa Houck, Senior Editor, is an award-winning B2B journalist with more than 35 years of experience. She writes about strategy, policy, and economic trends for EndeavorB2B on topics including data centers, cybersecurity, IT, OT, AI, manufacturing, industrial automation, energy, and more. With a master’s degree in communications from the University of Illinois Springfield, she previously served as Executive Editor for four magazines about sheet metal forming and fabricating at the Fabricators & Manufacturers Association, where she also oversaw circulation, marketing, and book publishing. Most recently, she was Executive Editor for the award-winning The Journal From Rockwell Automation publication on industrial automation where she also hosted and produced podcasts, videos and webinars; produced eHandbooks and newsletters; executed social media strategy; and more.

Matt Vincent

A B2B technology journalist and editor with more than two decades of experience, Matt Vincent is Editor in Chief of Data Center Frontier.