Navigating Liquid Cooling Architectures for Data Centers: Capacity and Form Factor Considerations for CDUs

Artificial Intelligence (AI) has been the talk of the town for many years, but recent demand for accelerated AI training and inference has led chip developers to push thermal design power (TDP) beyond the “traditional” limits imposed by air cooling. Given the limits of air cooling, next-generation servers will likely be liquid-cooled. This trend, combined with continued pressure to improve efficiency and sustainability, is forcing the data center industry to pivot and rethink how best to support these high-density loads. While air cooling has served the industry well for many years, attention is quickly turning to the integration of liquid cooling.

Today there are two main categories of liquid cooling solutions for AI servers: direct-to-chip and immersion. Both categories share similar elements for rejecting heat from the IT space to the environment, but they differ in how they capture the heat generated at the server level. The method of heat capture at the server will largely determine which category of solution is ultimately selected.

At a high level, in a liquid-cooled solution, heat is absorbed from the server and transferred to a fluid. In immersion cooling, this would likely be a dielectric fluid that comes into direct contact with, and circulates around, the server's components. In direct-to-chip cooling, the working fluid could be a refrigerant or a conditioned water/glycol mixture delivered through cold plates attached directly to the CPUs, GPUs, or FPGAs. Immersion cooling solutions may support 100% of a server's cooling requirements, whereas direct-to-chip liquid cooling may still require conditioned air for components without cold plates, such as power supplies and hard drives.
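To make the last point concrete, the sketch below splits a rack's heat load between the liquid loop and room air. It is a minimal Python illustration under stated assumptions: the capture fractions are assumed values chosen for the example, not figures from this article or any vendor, and the names `split_rack_heat` and `RackHeatSplit` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RackHeatSplit:
    liquid_kw: float  # heat captured by the liquid loop (cold plates or immersion fluid)
    air_kw: float     # residual heat that conditioned air must still remove


def split_rack_heat(rack_kw: float, liquid_capture_fraction: float) -> RackHeatSplit:
    """Split a rack's heat load between the liquid loop and room air.

    liquid_capture_fraction is an ASSUMED input: close to 1.0 for immersion,
    lower for direct-to-chip, where components without cold plates
    (power supplies, drives, etc.) are still air-cooled.
    """
    if rack_kw < 0:
        raise ValueError("rack_kw must be non-negative")
    if not 0.0 <= liquid_capture_fraction <= 1.0:
        raise ValueError("liquid_capture_fraction must be between 0 and 1")
    liquid_kw = rack_kw * liquid_capture_fraction
    return RackHeatSplit(liquid_kw=liquid_kw, air_kw=rack_kw - liquid_kw)


if __name__ == "__main__":
    # Hypothetical 100 kW rack; the 80% direct-to-chip capture is illustrative only.
    for label, fraction in (("direct-to-chip", 0.80), ("immersion", 1.0)):
        split = split_rack_heat(100.0, fraction)
        print(f"{label}: {split.liquid_kw:.0f} kW to liquid, {split.air_kw:.0f} kW to air")
```

With an assumed 80% capture fraction, a 100 kW direct-to-chip rack still leaves about 20 kW for the room air system to remove, while the immersion case leaves none.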

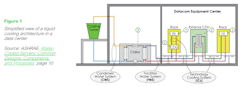

Figure 1 provides a simplified view of a liquid cooling architecture made up of three separate cooling loops. The first is the Technology Cooling System (TCS), which transfers heat from the IT assets to the Facilities Water System. The second is the Facilities Water System (FWS), which can be considered the primary coolant loop within the data center. The third is the Condenser Water System (CWS), which rejects heat from the Facilities Water System to the environment.
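One way to see how the three loops interact is to stack heat exchanger approach temperatures from the outside in. The sketch below assumes every loop boundary is a plain heat exchanger with a fixed approach temperature and no chiller; the function name and the example temperatures are illustrative assumptions, not values taken from Figure 1.

```python
from __future__ import annotations


def min_supply_temps_c(cws_supply_c: float,
                       fws_hx_approach_k: float,
                       cdu_hx_approach_k: float) -> dict[str, float]:
    """Lowest supply temperature each loop can reach, ASSUMING every loop
    boundary is a plain heat exchanger (no chiller) with a fixed approach."""
    if fws_hx_approach_k < 0 or cdu_hx_approach_k < 0:
        raise ValueError("approach temperatures must be non-negative")
    # CWS -> FWS: the FWS supply leaves its heat exchanger warmer than the CWS supply.
    fws_supply_c = cws_supply_c + fws_hx_approach_k
    # FWS -> TCS: the CDU heat exchanger adds its own approach on top.
    tcs_supply_c = fws_supply_c + cdu_hx_approach_k
    return {"CWS": cws_supply_c, "FWS": fws_supply_c, "TCS": tcs_supply_c}


if __name__ == "__main__":
    # Illustrative values only: 24 C condenser water, 2 K and 3 K approaches.
    for loop, temp_c in min_supply_temps_c(24.0, 2.0, 3.0).items():
        print(f"{loop} minimum supply: {temp_c:.1f} C")
```

Under these assumptions, a 24 °C condenser water supply with 2 K and 3 K approaches gives a minimum TCS supply of 29 °C. Adding a chiller between the CWS and FWS would let the FWS run colder than this simple chain allows.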

The coolant distribution unit (CDU) forms the boundary between the FWS and the TCS, isolating the IT fluid loop from the rest of the cooling system. CDUs perform five key functions that are critical to supporting liquid cooling solutions:

- Heat Exchange and Isolation – The fundamental function of the CDU is to transfer heat from the TCS to the FWS and to isolate the working fluid in the TCS from the rest of the cooling system.

- Temperature Control – The CDU must tightly control temperatures within the TCS loop to stay within the IT equipment manufacturers' specifications. Note that the CDU must also maintain an appropriate TCS loop temperature range even when the IT load is off.

- Pressure Control – The CDU manages pressure in the TCS loop to keep it within its design limits and to maintain the differential pressure (delta P) needed for the required flow. TCS loops can be designed to operate under positive or negative pressure. Negative pressure systems circulate the coolant below atmospheric pressure, so a breach tends to draw air in rather than push fluid out; this reduces the risk of leaks but can increase the complexity of the overall TCS loop design.

- Flow Control – CDUs must deliver adequate flow through the rack manifolds, connectors, and cold plates of each connected IT asset to carry the heat it generates back to the CDU, where it is transferred to the FWS. Variations in flow rate or delays in response time can degrade the performance of the connected IT asset and may even shorten the hardware's lifespan.

- Fluid Treatment – The filtration and chemistry of the TCS loop are critical and must be monitored closely.
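Temperature and flow control are closed-loop problems. As a rough illustration only, the sketch below holds the TCS supply temperature at a setpoint with a proportional-integral (PI) controller that modulates a hypothetical FWS-side valve, driving a toy first-order model of the loop. The gains, temperatures, time constant, and the assumption that the CDU regulates temperature through an FWS valve are all placeholders; real CDUs use their own control strategies and tuning.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Minimal PI loop holding TCS supply temperature by opening or closing a
    HYPOTHETICAL FWS-side control valve (0.0 = closed, 1.0 = fully open)."""
    setpoint_c: float
    kp: float             # valve fraction per degree C of error
    ki: float             # valve fraction per degree C-second of accumulated error
    integral: float = 0.0

    def update(self, measured_c: float, dt_s: float) -> float:
        error = measured_c - self.setpoint_c   # positive when the TCS runs hot
        candidate = self.integral + error * dt_s
        output = self.kp * error + self.ki * candidate
        if 0.0 <= output <= 1.0:
            # Anti-windup: only accept the new integral while the valve is not saturated.
            self.integral = candidate
        return min(max(output, 0.0), 1.0)


def simulate(steps: int = 600, dt_s: float = 1.0) -> float:
    """Toy first-order loop model (all numbers illustrative): with the valve
    closed the TCS drifts toward 50 C; fully open, it settles at 30 C."""
    ctrl = PIController(setpoint_c=40.0, kp=0.05, ki=0.01)
    temp_c, tau_s = 45.0, 30.0
    for _ in range(steps):
        valve = ctrl.update(temp_c, dt_s)
        steady_state_c = 50.0 - 20.0 * valve
        temp_c += (dt_s / tau_s) * (steady_state_c - temp_c)
    return temp_c


if __name__ == "__main__":
    print(f"TCS supply after 10 simulated minutes: {simulate():.2f} C")
```

Integrating only while the output is unsaturated is a common, simple anti-windup choice: it keeps the integral term from growing while the valve is pinned fully open or fully closed.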

Selecting the appropriate CDU requires considering two critical attributes: the type of heat exchanger and the CDU's capacity and form factor.

The types of heat exchangers available include:

- Liquid to Air (L-A) – Heat from the fluid in the TCS loop is transferred via a “radiator” directly to the conditioned data center air.

- Liquid to Liquid (L-L) – Heat from the fluid in the TCS loop is transferred to the FWS.

- Refrigerant to Air (R-A) – Two-phase refrigerant rejects heat from the cold plates directly to the conditioned data center air.

- Refrigerant to Liquid (R-L) – Two-phase refrigerant rejects heat from the cold plates directly to the facility water system.

- Liquid to Refrigerant (L-R) – Heat from the fluid in the TCS loop is rejected to the facility's pumped refrigerant system.
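The five heat exchanger types differ mainly in the TCS-side medium and in where the heat ends up, which makes them easy to encode as a lookup table. The Python sketch below filters the types by the heat sinks a site can offer; the enum and function names are hypothetical, and a real selection would weigh many more factors than heat sink availability.

```python
from __future__ import annotations

from enum import Enum


class HeatSink(Enum):
    ROOM_AIR = "conditioned data center air"
    FACILITY_WATER = "facility water system (FWS)"
    FACILITY_REFRIGERANT = "facility pumped refrigerant system"


# (TCS-side medium, heat sink) for each CDU heat exchanger type listed above.
CDU_HX_TYPES = {
    "L-A": ("liquid", HeatSink.ROOM_AIR),
    "L-L": ("liquid", HeatSink.FACILITY_WATER),
    "R-A": ("two-phase refrigerant", HeatSink.ROOM_AIR),
    "R-L": ("two-phase refrigerant", HeatSink.FACILITY_WATER),
    "L-R": ("liquid", HeatSink.FACILITY_REFRIGERANT),
}


def candidate_hx_types(available_sinks: set[HeatSink]) -> list[str]:
    """CDU heat exchanger types whose heat sink the site can actually provide."""
    return sorted(code for code, (_medium, sink) in CDU_HX_TYPES.items()
                  if sink in available_sinks)


if __name__ == "__main__":
    # A site with no facility water or pumped refrigerant: air-side rejection only.
    print(candidate_hx_types({HeatSink.ROOM_AIR}))
```

For example, at a site with no facility water or pumped refrigerant, `candidate_hx_types({HeatSink.ROOM_AIR})` returns `['L-A', 'R-A']`.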

CDUs are available in a wide range of capacities and form factors. Capacity is determined by the size of the pump sets, the fluid type, heat exchanger sizing, and pressure and flow requirements. CDUs are available in rack-mounted and free-standing configurations.
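How fluid type and flow requirements feed into capacity follows from the sensible heat balance Q = ρ·V̇·c_p·ΔT. The sketch below applies it to water and a 25% propylene glycol mixture; the property values are approximate room-temperature figures assumed for illustration, and the names `Coolant`, `WATER`, and `PG25` are hypothetical. Real sizing should use the fluid supplier's data at operating temperature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density_kg_m3: float
    cp_kj_kg_k: float


# Approximate property values near room temperature, ASSUMED for illustration only.
WATER = Coolant("water", 997.0, 4.18)
PG25 = Coolant("25% propylene glycol", 1020.0, 3.93)


def heat_capacity_kw(coolant: Coolant, flow_lpm: float, delta_t_k: float) -> float:
    """Heat a loop can carry: Q = rho * V_dot * cp * dT, with V_dot in L/min."""
    flow_m3_s = flow_lpm / 60_000.0  # L/min -> m^3/s
    return coolant.density_kg_m3 * flow_m3_s * coolant.cp_kj_kg_k * delta_t_k


def required_flow_lpm(coolant: Coolant, load_kw: float, delta_t_k: float) -> float:
    """Flow needed to remove load_kw with a given supply-to-return temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    volumetric_heat_kj_m3_k = coolant.density_kg_m3 * coolant.cp_kj_kg_k
    return load_kw / (volumetric_heat_kj_m3_k * delta_t_k) * 60_000.0


if __name__ == "__main__":
    for fluid in (WATER, PG25):
        flow = required_flow_lpm(fluid, load_kw=100.0, delta_t_k=10.0)
        print(f"{fluid.name}: {flow:.1f} L/min for 100 kW at a 10 K rise")
```

With these assumed properties, removing 100 kW at a 10 K temperature rise takes roughly 144 L/min of water but roughly 150 L/min of the glycol mixture, because the mixture's lower volumetric heat capacity (ρ·c_p) means each liter carries less heat.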

Rack-mounted CDUs are typically used to provide a TCS loop for a single rack in L-A or L-L configurations, with capacities ranging from 20 kW to roughly 40 kW.

Free-standing CDUs can be configured to provide the TCS loop for clusters of racks and are usually located near the liquid-cooled IT racks and/or immersion tanks.
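A first-pass form factor check can be sketched in a few lines. Only the ~40 kW upper end of the rack-mounted range comes from the text above; the per-unit capacity of a free-standing CDU and the N+1 redundancy default are hypothetical placeholders, and the function names are illustrative.

```python
import math

# Upper end of the ~20-40 kW rack-mounted CDU range cited in the text.
RACK_CDU_MAX_KW = 40.0


def suggest_form_factor(rack_kw: float) -> str:
    """Rough first pass: a single rack within the cited range -> rack-mounted CDU."""
    return "rack-mounted" if rack_kw <= RACK_CDU_MAX_KW else "free-standing"


def free_standing_cdus_needed(cluster_kw: float, unit_kw: float,
                              redundant_units: int = 1) -> int:
    """Units needed for a rack cluster at an ASSUMED per-unit capacity,
    plus a HYPOTHETICAL N+1 redundancy default."""
    if unit_kw <= 0:
        raise ValueError("unit_kw must be positive")
    return math.ceil(cluster_kw / unit_kw) + redundant_units


if __name__ == "__main__":
    print(suggest_form_factor(30.0), suggest_form_factor(120.0))
    # Hypothetical cluster: twelve 100 kW racks on assumed 800 kW units.
    print(free_standing_cdus_needed(12 * 100.0, 800.0))
```

For a hypothetical cluster of twelve 100 kW racks served by assumed 800 kW free-standing units, `free_standing_cdus_needed(1200.0, 800.0)` returns 3: two units for capacity plus one redundant unit.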

Figure 2 offers guidelines on CDU capacity and form factor.

Schneider Electric’s latest white paper addresses the growing need for liquid cooling systems to support AI workloads in servers. It discusses cooling options based on deployment scale, including the use of existing or dedicated heat rejection systems. The paper offers terminology, architectures, and decision factors as a starting point for data center operators developing their ecosystem. For answers to common liquid cooling questions, download the white paper, Navigating Liquid Cooling Architectures for Data Centers with AI Workloads, and optimize your data center cooling strategy today.

About the Author

Vance Peterson

Vance Peterson is a Solution Architect at Schneider Electric and an industry veteran with over 30 years’ experience working with end users, OEMs, and service providers supporting mission-critical facilities and data centers.

Schneider Electric's purpose is to create Impact by empowering all to make the most of our energy and resources, bridging progress and sustainability. At Schneider, we call this Life Is On. We are a global industrial technology leader bringing world-leading expertise in electrification, automation and digitization to smart industries, resilient infrastructure, future-proof data centers, intelligent buildings, and intuitive homes. Anchored by our deep domain expertise, we provide integrated end-to-end lifecycle AI-enabled Industrial IoT solutions with connected products, automation, software and services, delivering digital twins to enable profitable growth for our customers.