Why Liquid Cooling Is No Longer Optional for AI-Driven Data Centers

This launches our article series on the current state of cooling in data centers, how organizations are adapting their cooling strategies, and why liquid cooling is no longer a “nice to have” but a necessity.

If you’ve been paying even the slightest attention, you’ve seen it: the data center industry is transforming like never before. The surge of AI, high-performance computing (HPC), and next-gen workloads isn’t just pushing boundaries; it’s obliterating them.

We no longer live in an era where traditional “pizza box” machines and email servers define infrastructure needs. Today’s workloads are heavier, denser, and more power-hungry than ever. AI training, cognitive systems, and real-time analytics demand unprecedented levels of performance. And here’s the reality check: Air cooling, as we know it, is tapped out.

Air cooling has been the backbone of data center thermal management for decades. But the heat loads we’re dealing with today? They’re simply beyond what air can handle efficiently alone. Enter liquid cooling — the only viable path forward for data centers aiming to support AI, HPC, and high-density workloads at scale.

Industry leaders aren’t just experimenting with liquid cooling — they’re embracing hybrid solutions that integrate liquid technologies where they matter most. This shift isn’t just about keeping servers cool; it’s about enabling the next generation of computing.

This article series examines the current state of cooling in data centers, how organizations are adapting their cooling strategies, and why liquid cooling is no longer a “nice to have” but a necessity. We’ll discuss real-world deployments, the rapid advancements in liquid cooling technology, and how data center leaders are future-proofing their infrastructure to meet the demands of AI-driven workloads.

Because in this new era of density and compute power, survival belongs to the cool — literally.

Introduction

The data center landscape is evolving at an unprecedented pace. The convergence of artificial intelligence (AI), high-performance computing (HPC), and growing digital infrastructure demands is reshaping how data centers are designed, powered, and cooled. These changes are not incremental but structural, challenging conventional approaches to efficiency and sustainability.

Among the most pressing concerns is cooling. As computational density rises, traditional air-cooling methods are approaching their physical limits. In 2023, global data center power consumption reached 7.4 GW, a 55% increase from the previous year. This trend is expected to continue as AI workloads push power and cooling requirements far beyond what legacy infrastructure was built to support. A recent report from the U.S. Department of Energy (DOE) finds that data centers consumed about 4.4% of total U.S. electricity in 2023 and are expected to consume approximately 6.7% to 12% of total U.S. electricity by 2028.

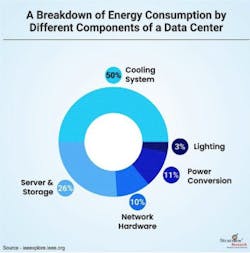

Cooling already accounts for 50% of total power usage in data centers, up from 43% a decade ago.

As rack power climbs well beyond 50 kW, air-based cooling methods struggle to dissipate heat efficiently. Consider this as you examine rack density: The Nvidia GB200 NVL72 system is already pushing rack density averages up to 120 kW. With GB300, rack density is slated to go even higher. The challenge is no longer about optimizing existing cooling strategies; it is about rethinking them entirely.

Liquid Cooling: Addressing the Limitations of Air-Based Systems

Liquid cooling has emerged as a necessary evolution in data center thermal management. Unlike air cooling, which relies on moving large volumes of air to remove heat, liquid cooling directly transfers heat away from components through liquid-to-air or direct-to-chip systems. This method is significantly more effective for high-density workloads, particularly in AI and HPC environments.
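To make the "large volumes of air" point concrete, the sketch below applies the standard sensible-heat relation (heat load = mass flow × specific heat × temperature rise) to estimate how much air or water must pass through a rack to carry away its heat. The fluid properties are approximate room-temperature values, and the temperature rises (15 K for air, 10 K for water) are illustrative assumptions, not figures from the report. The function and class names are hypothetical.

```python
# Rough estimate of the coolant flow needed to remove a rack's heat load,
# using the sensible-heat relation Q = m_dot * c_p * delta_T.
# Fluid properties and temperature rises are illustrative assumptions.

from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density_kg_m3: float         # approximate density near room temperature
    specific_heat_j_kg_k: float  # approximate specific heat capacity


AIR = Coolant("air", density_kg_m3=1.2, specific_heat_j_kg_k=1005.0)
WATER = Coolant("water", density_kg_m3=997.0, specific_heat_j_kg_k=4180.0)


def volumetric_flow_m3_s(heat_load_w: float, coolant: Coolant, delta_t_k: float) -> float:
    """Return the volumetric flow (m^3/s) needed to absorb heat_load_w
    when the coolant warms by delta_t_k while passing through the rack."""
    if heat_load_w < 0 or delta_t_k <= 0:
        raise ValueError("heat load must be non-negative and delta_t positive")
    mass_flow_kg_s = heat_load_w / (coolant.specific_heat_j_kg_k * delta_t_k)
    return mass_flow_kg_s / coolant.density_kg_m3


# Compare the two rack densities mentioned in the article.
for rack_kw in (50, 120):
    air_flow = volumetric_flow_m3_s(rack_kw * 1000, AIR, delta_t_k=15.0)
    water_flow = volumetric_flow_m3_s(rack_kw * 1000, WATER, delta_t_k=10.0)
    print(
        f"{rack_kw} kW rack: air {air_flow:.2f} m^3/s, "
        f"water {water_flow * 1000:.2f} L/s"
    )
```

Under these assumptions, a 120 kW rack needs roughly 6.6 cubic meters of air per second but under 3 liters of water per second, a difference of about three orders of magnitude in volume. Real designs depend on allowable inlet and outlet temperatures, coolant chemistry, and how much of the load is captured by liquid, so treat these as order-of-magnitude figures only.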

Real-world implementations highlight the scalability and efficiency of liquid cooling solutions, a topic we explore further toward the end of the paper. At the Start Campus facility in Portugal, for example, a 6.8 MW data hall integrates 12 EcoCore COOL Cooling Distribution Units (CDUs), each providing 1 MW of cooling capacity. Today, each CDU handles 800 kW at its operating point, well below the maximum capacity of 4,000 kW. This liquid-to-air cooling approach enables efficient heat dissipation at the rack level, demonstrating the viability of liquid cooling in large-scale deployments.

The rapid expansion of high-density computing has pushed traditional air-cooling methods to their limits, making efficient thermal management a critical challenge for data centers. As AI, HPC, and cloud workloads scale, liquid cooling has become a necessary evolution, offering superior heat dissipation and energy efficiency. Advances in CDUs, liquid-to-air systems, and rear-door heat exchangers have made liquid cooling more practical and scalable.

Download the full report, Survival of the Coolest: Why Liquid Cooling is No Longer Optional for HPC and AI-Driven Data Centers, featuring Nautilus Data Technologies, to learn more. In our next article, we’ll examine how liquid cooling became an imperative so quickly.

About the Author