Liquid Cooling as an Imperative: How Did We Get Here So Fast?

Last week, we launched our article series on the current state of cooling in data centers, how organizations are adapting their cooling strategies, and why liquid cooling is no longer a “nice to have” but a necessity. This week, we’ll examine how liquid cooling became an imperative so quickly.

A common misconception in the digital infrastructure industry is that liquid cooling is a recent innovation. This technology has a long and well-established history, proving its effectiveness across multiple generations of computing. Liquid cooling has been battle-tested for over five decades, evolving from its early use in mainframes (1970–1995) to high-performance gaming and custom-built PCs in the 1990s.

By the early 2000s, data centers began incorporating chilled rear-door heat exchangers, paving the way for widespread adoption in HPC, direct-to-chip cooling, and immersion systems from 2010 onward. As computing power and density rise, liquid cooling is not just an option — it is the logical next step in thermal management for modern data centers.

Redefining Data Center Cooling: The Impact of AI

Within the data center, evolving modernization strategies and emerging requirements driven by AI are reshaping the design and construction of next-generation digital infrastructure. Several key trends underscore this transformation:

- Adopting Data Hall-Scale Architecture. We’ve reached an era in data center design where we must think about massive-scale capabilities to support emerging use cases. Data hall-scale refers to the capacity and density of the computing equipment in a data center hall. Preparing for data hall-scale involves designing infrastructure that seamlessly accommodates the increasing power densities and thermal loads associated with advanced workloads like AI and HPC. Liquid cooling makes data hall-scale practical by providing efficient heat dissipation, enabling higher-density rack configurations (exceeding 50 kW per rack) while reducing the data center’s overall footprint and operational complexity. We must look at liquid cooling to get to that data hall-scale level. To do so, let’s look at liquid cooling through a more familiar lens: power distribution.

- Liquid Cooling Distribution: The Power Parallel. Understanding liquid cooling distribution becomes easier when comparing it to data center power distribution systems. Just as electrical power is managed via structured pathways (busbars, transformers, and power distribution units), liquid cooling systems rely on clearly defined components: coolant distribution units (CDUs), hydronic loops, heat exchangers, pumps, and valve systems. Nautilus’s EcoCore COOL CDU exemplifies this structured approach, mirroring the redundancy and scalability of power distribution systems with features like parallel distributed redundancy, intelligent controls, and integrated flow management to optimize operational efficiency and reliability. These technologies aren’t a “nice to have” any longer. They’re imperative because of how the workload has changed.

- What Changed in the Workload? The workloads running in modern data centers have shifted dramatically from traditional enterprise applications, such as email servers and SQL databases, to highly demanding generative AI (GenAI) and HPC workloads. We’re also seeing a growing shift toward inference-based workloads, which require GPUs and high-density hardware. This transition is pivotal because GenAI workloads significantly increase computational intensity, driving power and cooling demands far beyond legacy capabilities. The result is a need for infrastructure capable of handling higher thermal densities, precision cooling, and immediate scalability.

- How Can Data Centers Adapt? This part does not have to be scary or even overly challenging. Adapting to the demands of liquid cooling requires data centers to implement new data hall-scale solutions designed explicitly for high-density workloads. Modern data halls, like the Start Campus facility, integrate solutions such as Nautilus’s EcoCore COOL CDU systems, customized hydronic loop distribution, and modular liquid-to-air cooling systems directly at the rack level. These innovations enable data centers to handle the thermal loads of modern AI workloads efficiently and reliably, ensuring support for evolving business use cases that demand high computational intensity and swift scalability. We’ll touch on this a bit further, but this paper is not asking you to rip out your existing airflow ecosystem. Hybrid environments make for powerful data hall-scale solutions.
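To make the power parallel a little more concrete, here is a minimal sizing sketch in Python. It assumes a simple N+1 scheme, in which enough CDUs are installed to carry the hall load plus one spare, much as power systems are often sized. The 40-rack hall and the 600 kW per-CDU capacity are hypothetical numbers chosen for illustration; they are not specifications of any vendor's product, and N+1 is not necessarily how a given CDU implements its redundancy.

```python
import math


def cdus_required(hall_load_kw: float, cdu_capacity_kw: float, spares: int = 1) -> int:
    """Return the CDU count for an N+spares scheme (an illustrative assumption)."""
    if hall_load_kw <= 0 or cdu_capacity_kw <= 0:
        raise ValueError("load and capacity must be positive")
    if spares < 0:
        raise ValueError("spares cannot be negative")
    # N: units needed to carry the full load, rounded up to whole units.
    n = math.ceil(hall_load_kw / cdu_capacity_kw)
    return n + spares


# Hypothetical data hall: 40 racks at 50 kW each.
racks = 40
rack_kw = 50.0
hall_load_kw = racks * rack_kw

# Hypothetical CDU capacity of 600 kW per unit.
units = cdus_required(hall_load_kw, cdu_capacity_kw=600.0)
print(f"Hall load: {hall_load_kw:.0f} kW, CDUs for N+1: {units}")
```

The same function can be rerun with a different spare count or unit capacity to see how redundancy choices change the equipment list, just as one would when sizing UPS or PDU capacity.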

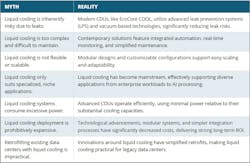

Legacy Liquid Cooling Myths

Despite significant advancements, several misconceptions persist about liquid cooling technology. In fact, we could have had an entire section dedicated only to liquid cooling myths, concerns, and misconceptions. Addressing these myths is crucial for organizations exploring more efficient, scalable, and resilient cooling solutions. Modern liquid cooling systems have evolved significantly, making them not only viable but essential for today’s data center challenges. While there are others, these are some of the top myths that have already been overcome using modern innovations.

Given these developments, organizations should actively explore liquid cooling solutions. Technologies such as rear-door heat exchangers have become notably easier to deploy, offering compelling efficiency gains, improved performance, and significant operational savings.

With all of this in mind, let’s explore some of these advancements in liquid cooling architecture and how they already impact modern data centers.

Breaking New Ground: Advancements in Liquid Cooling Architecture

By the late 1990s and early 2000s, data center operators were already questioning whether air cooling could keep up with increasingly power-hungry servers. With rack densities surpassing 5 kW per cabinet, many predicted a shift toward rear-door heat exchangers and in-row cooling to manage rising heat loads. Yet, for decades, raised floor cooling systems remained the industry standard, relying on CRAC and CRAH units to pressurize underfloor air, which was then pushed through perforated tiles to cool server intakes.

While effective for traditional workloads, this approach has inherent inefficiencies, particularly as hot and cold air often mix, reducing cooling performance. Today, with AI, HPC, and generative AI workloads demanding far greater densities, the question is clear: Can legacy air-cooling systems adapt, or is it time for a fundamental shift in data center cooling strategy?

How Low Can You Go?

Cooling servers might seem simple — remove heat to prevent overheating. But in reality, effective thermal management is far more complex, especially in today’s high-density, high-performance environments. When a server runs too hot, built-in safeguards will throttle performance or shut it down entirely to prevent damage. And let’s be clear: When your AI training cluster or analytics engine suddenly powers down mid-process, it’s not just an inconvenience. It’s lost efficiency, wasted power, and potential downtime that impact business outcomes.

But heat isn’t the only enemy. HPC, AI, and data-intensive workloads rely on incredibly sensitive hardware that’s vulnerable to more than just temperature fluctuations. Particle contamination, whether from dust, airborne debris, or even gaseous pollutants, can degrade components over time. In certain conditions, corrosive gases can accelerate failure rates in circuits and chips, turning an infrastructure investment into a maintenance nightmare.

So, what’s the takeaway? Traditional air-cooled systems have served data centers well, and they still have a place. But modern workloads — especially those pushing power densities of 50 kW per rack or more — demand a different approach. As compute requirements evolve, so too must the cooling strategies that keep them running.
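A quick back-of-the-envelope calculation shows why 50 kW racks strain air cooling. The sketch below uses the standard sensible-heat relation (heat removed = density × specific heat × volumetric flow × temperature rise) to estimate how much air versus water must flow through a rack to carry away 50 kW. The fluid properties are approximate textbook values, and the 10 K temperature rise is an assumption chosen only so the two fluids can be compared on equal terms.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR_DENSITY = 1.2       # kg/m^3
AIR_CP = 1005.0         # J/(kg*K)
WATER_DENSITY = 997.0   # kg/m^3
WATER_CP = 4186.0       # J/(kg*K)


def volumetric_flow_m3s(heat_w: float, density: float, cp: float, delta_t_k: float) -> float:
    """Flow needed to absorb heat_w with a coolant temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (density * cp * delta_t_k)


rack_heat_w = 50_000.0  # the 50 kW rack density cited in the article
delta_t_k = 10.0        # assumed coolant temperature rise, for illustration only

air_flow = volumetric_flow_m3s(rack_heat_w, AIR_DENSITY, AIR_CP, delta_t_k)
water_flow = volumetric_flow_m3s(rack_heat_w, WATER_DENSITY, WATER_CP, delta_t_k)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 1000 * 60:.1f} L/min")
print(f"Air needs about {air_flow / water_flow:.0f}x the volume of water")
```

Under these assumptions, the rack needs on the order of 4 cubic meters of air per second, versus a little over 1 liter of water per second. That gap of more than three orders of magnitude in volume is the physical reason liquid becomes attractive as density climbs.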

This isn’t just an upgrade. It’s a fundamental shift in how we engineer infrastructure for the next generation of computing.

Time to Take a Dip: Liquid Cooling to the Rescue!

The future is heating up, literally. AI-driven workloads, dense GPU deployments, and HPC environments are rapidly pushing traditional air cooling to its limits. Liquid cooling has emerged as more than just a viable alternative; it’s now essential for managing these unprecedented thermal demands. It’s time to explore how modern liquid cooling technologies like direct-to-chip liquid cooling (DLC) and rear-door heat exchangers (RDx) are reshaping data center cooling strategies.

Liquid Cooling Innovations: DLC and Beyond

Direct-to-chip liquid cooling (DLC) has become increasingly vital, offering precision cooling directly to high-performance processors and GPUs. This approach delivers substantial advantages:

- Enhanced Efficiency: DLC systems cool processors directly at the source, significantly improving heat transfer efficiency compared to air-based solutions.

- High Density Support: DLC enables efficient thermal management of extreme-density racks, easily handling power densities of 50 kW per rack and higher.

- Compact and Modular Designs: Modern DLC solutions feature modular, quick-connect components, simplifying installations and scaling seamlessly with evolving workloads.

- Advanced Water Distribution: Innovations in coolant distribution include compact, efficient pumps, intelligent flow controls, and integrated monitoring systems, enhancing both reliability and energy efficiency.

While DLC addresses heat directly at critical chip-level components, rear-door heat exchangers (RDx) remain valuable, particularly in hybrid cooling environments:

- Supplemental Cooling: RDx units effectively manage residual heat at the rack level, complementing DLC by reducing ambient temperatures within the data hall.

- Ease of Integration: Modern RDx systems feature streamlined, modular designs, enabling rapid deployment and easy maintenance alongside DLC solutions.

- Hybrid Cooling Architecture: Combining DLC and RDx technologies allows for effective cooling of both high-density and legacy equipment, ensuring comprehensive thermal management across diverse infrastructure.
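The division of labor in a hybrid design can be sketched with simple arithmetic. The example below splits a rack's heat between the DLC loop and the residual that an RDx (or room air) must handle. The 75% capture fraction is a hypothetical value chosen for illustration; the real share depends on the server design and which components carry cold plates.

```python
def split_rack_heat(rack_kw: float, dlc_capture_fraction: float) -> tuple[float, float]:
    """Return (heat removed by DLC, residual heat left for RDx/air), both in kW."""
    if rack_kw < 0:
        raise ValueError("rack load cannot be negative")
    if not 0.0 <= dlc_capture_fraction <= 1.0:
        raise ValueError("capture fraction must be between 0 and 1")
    dlc_kw = rack_kw * dlc_capture_fraction
    return dlc_kw, rack_kw - dlc_kw


# 50 kW rack from the article; 0.75 capture fraction is an assumed, hypothetical value.
dlc_kw, residual_kw = split_rack_heat(50.0, 0.75)
print(f"DLC loop: {dlc_kw} kW, residual for RDx/air: {residual_kw} kW")
```

Even with most of the heat captured at the chip, the residual in this example is still larger than an entire 5 kW legacy cabinet, which is why the rack-level stage remains part of the design.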

Download the full report, Survival of the Coolest: Why Liquid Cooling is No Longer Optional for HPC and AI-Driven Data Centers, featuring Nautilus Data Technologies, for exclusive content on the challenges of air cooling specific workloads. In our next article, we’ll explore the liquid cooling designs making an impact today.
