A recent study by BestBrokers found that, over a year, the total energy consumption of ChatGPT, a Gen AI chatbot, was 454 million kWh, enough to handle 156 billion prompts. According to the latest data from the Energy Information Administration (EIA), 24 countries each consume less energy annually than ChatGPT, including Dominica, Haiti, and St. Lucia. ChatGPT relies on advanced chips to process these queries, and those chips convert almost all of the electrical energy they draw into heat. To perform high-performance computations reliably, the chips must be held at a consistent nominal temperature. To maintain these temperatures and maximize system uptime, data centers employ various cooling techniques.
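
As a rough check on the scale of these figures, dividing the reported annual energy by the reported prompt count gives an average energy per prompt. The sketch below uses only the two numbers quoted above; the function name is illustrative and the result is a simple average, not a measurement.

```python
def energy_per_prompt_wh(total_kwh: float, prompts: float) -> float:
    """Average energy per prompt in watt-hours (simple division, no overheads modeled)."""
    if prompts <= 0:
        raise ValueError("prompts must be positive")
    # 1 kWh = 1000 Wh
    return total_kwh * 1000.0 / prompts


if __name__ == "__main__":
    # Figures quoted in the text: 454 million kWh and 156 billion prompts per year.
    wh = energy_per_prompt_wh(454e6, 156e9)
    print(f"{wh:.2f} Wh per prompt")  # prints "2.91 Wh per prompt"
```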

Traditionally, data centers have relied on air cooling. Air cooling worked well until recently, when chip, memory, and drive wattages reached an inflection point and pushed the temperatures of high-performance chips beyond what air cooling can handle. As air cooling struggles to keep up with rising heat loads at the processor level, data center teams are moving towards liquid cooling, where chips are cooled directly via a cold plate or immersion.

Direct-to-chip (DTC) cooling technology utilizes a liquid-cooled cold plate placed directly on the surface of heat-generating processing chips to absorb and dissipate heat efficiently. This keeps chips at an optimal temperature and enhances overall system performance.

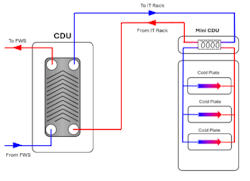

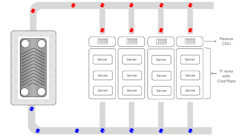

As shown in the image below, the typical architecture of direct-to-chip cooling involves three main components: coolant distribution units (CDUs), manifolds, and cold plates. CDUs serve as the heat transfer interface between two hydraulic loops: the loop that circulates coolant through the cold plates and the facility water loop, which ultimately rejects the heat to the atmosphere. Direct-to-chip cooling removes heat from the cold plate through either a single-phase or two-phase process. This heat is transferred through one or more CDUs until it is dissipated into the facility's water systems.

In a single-phase direct-to-chip system, the coolant stays liquid: it absorbs heat as a rise in its own temperature (sensible heat) and then flows to the CDUs. In a two-phase system, the coolant evaporates at the cold plate and absorbs heat while its temperature stays nearly constant. As silicon heat dissipation is on the rise, two-phase systems could be a better choice because a coolant absorbs far more heat per unit mass by vaporizing (latent heat) than by warming a few degrees as a liquid.
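
The difference can be illustrated with a simple energy balance. A single-phase loop removes heat as Q = m·cp·ΔT, while a two-phase loop removes it as Q = m·h_fg·x, where x is the vapor quality leaving the cold plate. The sketch below is a minimal comparison under stated assumptions: the fluid properties (cp = 1300 J/(kg·K), h_fg = 150 kJ/kg), the 10 K temperature rise, and the exit quality of 0.5 are illustrative placeholders, not data for any specific refrigerant, and the two-phase case assumes the coolant enters the cold plate as saturated liquid.

```python
def single_phase_flow(heat_w: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Mass flow (kg/s) needed to absorb heat_w as sensible heat: Q = m * cp * dT."""
    if cp_j_per_kg_k <= 0 or delta_t_k <= 0:
        raise ValueError("cp and delta_t must be positive")
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def two_phase_flow(heat_w: float, h_fg_j_per_kg: float, exit_quality: float) -> float:
    """Mass flow (kg/s) needed to absorb heat_w as latent heat: Q = m * h_fg * x.

    Assumes the coolant enters as saturated liquid and leaves with vapor
    mass fraction exit_quality (0 < x <= 1).
    """
    if h_fg_j_per_kg <= 0 or not 0 < exit_quality <= 1:
        raise ValueError("h_fg must be positive and exit_quality in (0, 1]")
    return heat_w / (h_fg_j_per_kg * exit_quality)


if __name__ == "__main__":
    heat_w = 1000.0        # chip heat load, W (illustrative)
    cp = 1300.0            # liquid specific heat, J/(kg*K) (assumed value)
    h_fg = 150e3           # latent heat of vaporization, J/kg (assumed value)

    m_single = single_phase_flow(heat_w, cp, delta_t_k=10.0)
    m_two = two_phase_flow(heat_w, h_fg, exit_quality=0.5)
    print(f"single-phase: {m_single:.4f} kg/s")
    print(f"two-phase:    {m_two:.4f} kg/s")
    print(f"flow ratio:   {m_single / m_two:.1f}x")
```

With these placeholder values, the two-phase loop needs several times less coolant flow for the same heat load; the actual ratio depends on the fluid and operating conditions chosen.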

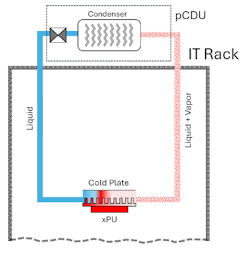

An active single-phase CDU, when combined in a hybrid manner with a passive CDU (pCDU), can bridge the gap between HPC chips and facility water systems. A pCDU is integrated with IT server racks and employs low global warming potential (GWP) refrigerants to transfer heat via evaporation. Heat from the chips is conducted through a thermal interface material to the cold plate, where it partially evaporates the refrigerant. The resulting liquid-vapor mixture is driven by the balance between buoyancy and gravity forces (a process called thermosiphoning), which carries the heat to the pCDU without a pump. The pCDU acts as a condenser, where the two-phase mixture is condensed back into a single-phase liquid before it returns to the cold plate. While the refrigerant continues through the cold plate cycle, the heat rejected from the condenser is carried by a liquid coolant via manifolds to an active CDU, such as an in-row CDU. The in-row CDU, typically a liquid-to-liquid heat exchanger, then transfers the heat to the facility's water systems.
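
The conduction step from chip to refrigerant can be approximated with a lumped series thermal-resistance model: the chip temperature is the refrigerant saturation temperature plus the heat load times the sum of the resistances in the path. The sketch below is a simplified illustration, not the article's design method; the function name, the 700 W load, the 45 °C saturation temperature, and the two resistance values (thermal interface material and cold plate) are all hypothetical.

```python
from typing import Iterable


def chip_temperature_c(heat_w: float, coolant_temp_c: float,
                       resistances_k_per_w: Iterable[float]) -> float:
    """Estimate chip temperature with series thermal resistances.

    T_chip = T_coolant + Q * sum(R_i), where each R_i is in K/W.
    This lumped model ignores heat spreading and transient effects.
    """
    total_resistance = 0.0
    for r in resistances_k_per_w:
        if r < 0:
            raise ValueError("thermal resistance cannot be negative")
        total_resistance += r
    return coolant_temp_c + heat_w * total_resistance


if __name__ == "__main__":
    # Hypothetical values: 700 W chip, refrigerant boiling at 45 C,
    # R_TIM = 0.02 K/W and R_cold_plate = 0.03 K/W.
    t_chip = chip_temperature_c(700.0, 45.0, [0.02, 0.03])
    print(f"estimated chip temperature: {t_chip:.1f} C")
```

Because the refrigerant evaporates at a nearly constant temperature, the coolant-side term in this model stays roughly fixed across the cold plate, which is one reason the two-phase stage is placed closest to the chip.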

For many data centers, liquid cooling offers better performance while reducing energy usage. Deploying it sustainably, however, depends on choosing the right liquid cooling architecture. The right combination of single- and two-phase systems can give data centers a long-term edge, reducing power usage effectiveness (PUE) and total cost of ownership throughout the lifetime of the IT equipment. By combining row-level CDUs with two-phase passive CDUs, this architecture provides a flexible way for data centers to support next-generation IT equipment.
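
PUE is defined as total facility energy divided by the energy delivered to IT equipment, so any reduction in cooling energy lowers it toward the ideal value of 1.0. The sketch below shows the arithmetic; the load figures are hypothetical and chosen only to illustrate the effect of cutting cooling power, not to represent measured results for any cooling technology.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    if cooling_kw < 0 or other_overhead_kw < 0:
        raise ValueError("overheads cannot be negative")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


if __name__ == "__main__":
    # Hypothetical facility: 1000 kW of IT load, 100 kW of non-cooling overhead.
    before = pue(it_kw=1000.0, cooling_kw=400.0, other_overhead_kw=100.0)
    after = pue(it_kw=1000.0, cooling_kw=150.0, other_overhead_kw=100.0)
    print(f"PUE with higher cooling load: {before:.2f}")  # 1.50
    print(f"PUE with lower cooling load:  {after:.2f}")   # 1.25
```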

About the Author