Taking the Compromise Out of Buy vs. Build Calculus

The first time an end user told me they’d self-build 100% of the time if they could, my instinct was to be insulted. I had, after all, spent more than two decades developing third-party capacity for end users.

But it made sense. By self-performing, end users keep control. When they lease from a third party, they get capacity on a shorter timeframe than self-build, yes, but they often must give up control or compromise on design, operations, and ownership. (Control over design is in fact a key driver of hyperscalers’ choices to self-build.)

That day I realized: End users’ preference for self-performance wasn’t an insult but an invitation to provide third-party capacity that feels self-performed, with timing flexibility plus optionality in design, operations, and ownership. Delivering the best of both worlds means taking the compromise out of customers’ buy-versus-build decision, and being the best partner a hyperscaler could hope for.

The cone of uncertainty: Why end users who prefer to self-build still lease third-party capacity

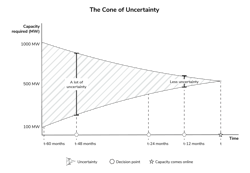

Most hyperscalers will self-build capacity for the business-as-usual demand they’re fairly certain of. But for demand that’s uncertain or a result of an unexpected uptick in requirements — the kind driven by the introduction of AI and the ensuing AI arms race — they tend to lean on third-party providers. This is because it’s impossible to have perfect visibility into when and how much capacity will be needed at the start of a 4- or 5-year internal build cycle.

The longer the forecast horizon, the more uncertainty there is, and the more uncertainty there is, the riskier it is to make decisions about capacity 4-5 years in advance.
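To make the cone concrete, here is a minimal sketch of how a forecast band can widen with the horizon. It assumes forecast error grows with the square root of time, as in a simple random walk; that growth rule, the function name `forecast_band`, and the 100 MW and 20% figures are illustrative assumptions, not numbers from any hyperscaler or from Stream.

```python
import math


def forecast_band(expected_mw, annual_volatility, years_ahead):
    """Return a (low, high) capacity band around an expected demand figure.

    Assumption: uncertainty grows with the square root of the horizon,
    as it would under a simple random walk. Real demand may not behave this way.
    """
    spread = expected_mw * annual_volatility * math.sqrt(years_ahead)
    return expected_mw - spread, expected_mw + spread


# Hypothetical inputs: 100 MW expected demand, 20% uncertainty per year.
for years in (1, 2, 5):
    low, high = forecast_band(expected_mw=100.0, annual_volatility=0.2, years_ahead=years)
    print(f"{years} yr ahead: {low:.0f} to {high:.0f} MW")
```

Under these assumptions, the band for a decision made five years out is more than twice as wide as the band for a decision made one year out, which is the gap a shorter commitment window is meant to close.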

Managing standard development timelines in a quickly changing market makes being absolutely right nearly impossible, and being wrong on self-build decisions is risky. The challenge is matching the size and timing of investment to the size and timing of the revenue — to spend the right amount of money at the right time to maximize revenue.

Overestimating how much capacity will be needed at any given time leads to a premature deployment of capital that could have a material impact on the business’s bottom line. Underestimating may be even worse, as it could mean getting left behind on the next major technological shift. As Meta CEO Mark Zuckerberg explained: “If superintelligence is possible in three years but you built [your infrastructure] out assuming it would be there in five years, then you’re out of position on what I think is going to be the most important technology in history.”

In times like these, the longer end users can wait before committing to a set amount of capacity at a set date, the less risky their decisions become.

Hyperscalers are among the smartest, most sophisticated companies in the world; the fault is not in their forecasting, but in the unprecedented scale and pace of technology change. (We’ve all seen how the volume of capacity an end user needs at a given time can quickly and dramatically change.) To meet this level of dynamism, even companies with the best construction teams in the world are augmenting with third-party capacity so they can make decisions 12-24 months in advance instead of 4-5 years ahead.

In this market, third-party capacity is a vital tool

As AI continues to evolve, more step-change increases in demand are likely in the future. Predicting capacity demand on the typical self-build ramp of 4-5 years will remain incredibly challenging. Demand will be huge, but how huge? And when will it arise — in two years, or four? What will the associated design specifications be?

The answers depend on factors like whether some company figures out a way to train AI models with a fraction of the resources, how close the next phase of AI models really is to being real, the actual slope of the AI adoption curve, and how soon deployments will include GPUs designed for densities of 1 MW per rack. They also depend on the business decisions of each hyperscaler, which can dramatically change the volume of capacity needed, and on how a shift from model training to inference changes design specs and capacity needs.

In other words, accurately predicting future demand for data center capacity depends on questions one would need a crystal ball to answer.

In the absence of a crystal ball, end users will continue to turn to third-party capacity for the demand that’s most uncertain. But even then, well over 90% of the third-party capacity coming online is pre-leased, and most third-party providers have a 24-month ramp for new capacity.

Delivering third-party capacity with levels of comfort and control that feel internal

Third-party data center providers are handling evolving hyperscale requirements in different ways. Stream’s approach is to provide customers capacity with time-to-market under 24 months and with additional timing flexibility. Added wiggle room, plus optionality around design, operations, and ownership, allows customers to better align expenditures with revenues so that the capacity development feels and operates like their internal facilities. (One customer even went on record, stating that working with Stream was about 90% similar to working with their own internal delivery teams. In this market landscape, that’s maybe the best praise any of us developers can get.)

Here's how we built a development discipline that delivers more timing flexibility and more internal-like optionality:

Access to capital

We have access to the capital required to significantly scale our developments at the rate hyperscale customers demand — without sacrificing an approach rooted in collaboration and agility.

Expert collaborators

Having team members from the hyperscale space is critical. If you want to make the facility feel like ‘home’, you must know what home is like for customers, understanding the challenges and opportunities they face and how to manage them. Our team is focused on knowing not only what makes a site viable for hyperscale development or how a hyperscale data center is designed, but also how the business functions, and the pressures on data center teams to deliver for the business.

Of course, technology is constantly changing, so a culture of collaboration is essential too. That’s why we’re not just taking what we know about hyperscale development and operations today and applying it; we’re continuously learning and adapting alongside our customers. It’s a collaborative, not prescriptive, approach.

Our supply chain discipline

Our customers don’t have to give up customization and control in exchange for timing flexibility. That timing flexibility comes in part from our standardized-but-configurable MEP package — a kit of parts that can be deployed anywhere, in a range of form factors to best benefit the end user.

Our supply chain discipline also enables flexibility in what the deployment looks like. A perfect example of this is our configurable, modular hybrid cooling solution, which enables customers to late-bind density decisions without impacting time to market. Standardized-but-configurable means we adapt to customers’ own solutions too. For instance, if customers have an in-row or in-rack direct liquid cooling solution and want Stream to deploy only a specific amount of air cooling, we can dial that in.

By enabling end users to make capacity decisions when they have more certainty about demand and at the same time giving them control over design, operations, and ownership, a developer can change the age-old build-versus-buy calculus to work with hyperscale realities instead of trying to work around them.

As developers, we have an opportunity to truly collaborate with our customers to deliver predictable, programmatic capacity in a manner that better reflects their self-build requirements without losing the benefits that third-party development can offer. By creating a system that blends the comfort and control of self-build with the risk and scale advantages of third-party development, without requiring too much compromise, we can help our customers feel right at home.

About the Author

Chris Bair

Chris Bair brings 25+ years of experience to the data center industry, and as CCO and Partner, helps Stream's team earn and maintain trust with consistent, exceptional data center experiences.

Stream Data Centers, a time-tested hyperscale partner and one of the longest-standing developers in the industry, has built and operated data centers for the largest and most sophisticated users since 1999. Today, SDC has 90% of its capacity leased to the Fortune 100 across 10+ national markets, with more under development.