Intel DCM: Whereby Dog Food Becomes a Home-Cooked Meal

In this week’s Voice of the Industry, Jeff Klaus, Intel’s GM of Data Center Solutions, discusses the benefits of ‘dogfooding’ and how it is often the best method to improve a product’s quality and usability.

JEFF KLAUS, Intel

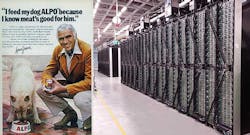

The popular television actor, with his venerable presence and naturally rugged good looks, was effortlessly persuasive. In order to entice consumers to have confidence in their products, Alpo’s TV commercial and print campaigns emphasized that its spokespersons used the very products they advertised. Against a pastoral backdrop enters Lorne Greene, the consummate patriarch attired in western garb, who looks straight into the camera and drawls, “When it comes to feeding my own dog, I know there isn’t a better dog food than Alpo.”

Sales spiked, and the concept of “eating your own dog food,” or dogfooding, was born.

Examples of companies engaging in dogfooding, even in support of products whose main ingredient isn’t real beef, such as technology, abound. In 1980, the president of Apple Computer, Inc., as it was then known, drafted a company-wide memo to ensure the eradication of all typewriters across its offices. “We believe the typewriter is obsolete,” Michael Scott wrote. “Let’s prove it inside before we try and convince our customers.”

In 1999, Hewlett-Packard’s office staff referred to a project that required using HP’s own products as “Project Alpo.”

Capturing Benefits in Usability

Encouraging those who design products to actually use and rely on them is often thought to improve usability. In software engineering, usability is the degree to which an operating system, program or application can be used by specified customers to achieve quantified objectives with effectiveness, efficiency and satisfaction in a defined context of use. Venture capital funding within the software industry increased 37 percent in 2015 to a record $24.5 billion, a sign of how quickly the software market is growing. Yet of the thousands of software companies that exist, many don’t appreciate the benefits of using their own solutions, including catching bugs in the system and ensuring the UX feels natural. The benefits of dogfooding are plentiful, and often the best solutions to internal problems are those that are developed in-house.

And, by the way, if you find the term dogfooding unappealing, we can use the alternate phrases “drinking our own champagne,” as Pegasystems, a developer of customer relationship and business process management software, did; “icecreaming,” as Microsoft once preferred; or “eating our own cooking,” which the developers of IBM’s mainframe operating systems have long used.

But libationary or gastronomic metaphors aside, why wouldn’t a technology company deploy its own solution across its enterprise environment in order to determine whether it performed as advertised?

Standardizing to Reduce Energy Footprint

As detailed in a recent white paper, Intel IT enlisted its own Intel Data Center Manager (Intel DCM) to standardize operations of its 60 global data centers and improve its corporate green initiatives. Given recent increases in data and energy costs, the company was looking for a solution that could manage complex data center infrastructures, help reduce costs, and be easy to deploy and use. Intel DCM is a standalone solution that provides accurate, real-time power and thermal monitoring and management of individual servers, groups of servers, racks, and other IT equipment, such as Power Distribution Units (PDUs). Intel DCM provides benefits for both IT and facility administrators, enabling these groups to collaborate to reduce a data center’s energy footprint.

In its initial use of Intel DCM, Intel IT considered the solution to be focused primarily on gaining a better understanding of the power consumption and thermal status of servers. With broad deployment, it became apparent that Intel DCM is capable of much more.

The analysis covered not only servers and racks from different OEMs, but also storage, networks and facilities equipment. Data center managers were able to detect hotspots and cooling anomalies, and find “zombie” or underused servers. Servers use 50 percent of a data center’s power, even when those servers are idle. Because Intel DCM was easy to implement, integrate, administer and operate, this solution provided significant ROI in a short amount of time.
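To make the idea of finding “zombie” or underused servers concrete, here is a minimal sketch of one way such a check could work. It is not Intel DCM’s method or API: the `ServerSample` type, the `find_underused` function and the 5 percent threshold are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ServerSample:
    """Hypothetical record of utilization samples for one server."""
    name: str
    cpu_utilization: list[float]  # percent, one value per sampling interval


def find_underused(servers, max_avg_cpu=5.0):
    """Return names of servers whose average CPU utilization is at or
    below max_avg_cpu (an assumed threshold, not a DCM default)."""
    return [
        s.name
        for s in servers
        if s.cpu_utilization and mean(s.cpu_utilization) <= max_avg_cpu
    ]


fleet = [
    ServerSample("web-01", [40.0, 55.0, 60.0]),
    ServerSample("db-02", [2.0, 3.0, 1.0]),
]
candidates = find_underused(fleet)
```

In practice a team would likely weigh other signals as well, such as network traffic or power draw over a longer window, before retiring a server.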

The Value of Real-Time Data

Delving further into its success, Intel DCM’s real-time data from a large sample of servers enabled data center managers to conduct more accurate and proactive capacity planning. Aggregating server-inlet temperature data into thermal maps allowed them to check the effectiveness of their cooling solutions and airflow design. Intel DCM also provided predictive thermal analysis, which in several cases helped to eliminate thermal risks and to identify hotspots and thermal inefficiencies, giving our data center managers enough information to change the design of problematic rooms to increase energy efficiency.

Finally, Intel DCM provided real-time power and thermal data to empower administrators to make better decisions about load balancing and right-sizing the data center’s environment. They were also able to create user-defined alarms that warn of potential circuit overloads before any actual failures or service disruptions occur. Intel IT estimates that this type of data will help reduce overall data center energy consumption while improving operational efficiency.
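A user-defined alarm of the kind described above can be pictured as a simple threshold check on the total power drawn per circuit. The sketch below is an assumption-laden illustration, not Intel DCM’s interface: the function name `circuit_alarms`, the input dictionaries and the 80 percent warning fraction are all hypothetical.

```python
def circuit_alarms(readings_watts, capacity_watts, warn_fraction=0.8):
    """Return the sorted names of circuits whose total draw has reached
    warn_fraction of capacity.

    readings_watts: dict mapping circuit name -> list of per-server watts
    capacity_watts: dict mapping circuit name -> rated capacity in watts
    warn_fraction:  assumed warning level (hypothetical, not a DCM default)
    """
    alarms = []
    for circuit, server_watts in readings_watts.items():
        total = sum(server_watts)
        if total >= warn_fraction * capacity_watts[circuit]:
            alarms.append(circuit)
    return sorted(alarms)


readings = {"rack-A": [300.0, 350.0, 400.0], "rack-B": [200.0, 150.0]}
capacity = {"rack-A": 1200.0, "rack-B": 1200.0}
warnings = circuit_alarms(readings, capacity)
```

Warning at a fraction of capacity, rather than at the limit itself, is what leaves administrators time to rebalance load before a breaker trips.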

Now that’s dog food that rises to the level of champagne, ice cream and a nutritious, home-cooked meal.

Submitted by Jeff Klaus, GM of Data Center Solutions at Intel. You can follow Jeff on Twitter @jsklaus