GenAI Pushes Cloud to $119B Quarter as AI Networking Race Intensifies

Key Highlights

- Generative AI has become the primary driver of cloud market growth, which reached roughly 30% year over year in Q4 2025, the fastest rate in more than three years.

- Major cloud providers like Amazon, Microsoft, and Google maintain dominant market shares, while AI-native providers such as CoreWeave and OpenAI are rapidly expanding their cloud revenues.

- Data movement and network fabric efficiency are now central to AI infrastructure, prompting vendors like Cisco to develop high-throughput switches and liquid cooling solutions.

- The shift toward gigawatt-scale AI clusters necessitates innovations in network architecture, and analysts say the move to 1.6T backend networks could push the Ethernet data center switch market above $100 billion annually.

- AI infrastructure is evolving into a system where compute, cooling, and networking must advance together, moving beyond traditional cloud competition to a focus on physical and architectural data center layers.

The cloud market is no longer just expanding: it is accelerating under the weight of generative AI.

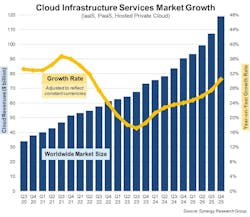

New data from Synergy Research Group shows enterprise spending on cloud infrastructure services reached $119.1 billion in Q4 2025, up nearly $12 billion sequentially and $29 billion year over year. For full-year 2025, the market climbed to $419 billion.

After adjusting for currency effects, Synergy estimates the market expanded roughly 30% year over year in Q4, its fastest growth rate in more than three years and the ninth consecutive quarter of accelerating demand.
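As a rough check on these figures, the reported dollar increases can be converted into implied nominal growth rates. The sketch below assumes the rounded increases of $12 billion and $29 billion apply exactly, which the source only approximates ("nearly $12 billion"). The function name `implied_growth` is illustrative and is not part of Synergy's methodology. The results are nominal, so they should not be expected to match the currency-adjusted figure exactly.

```python
def implied_growth(current: float, increase: float) -> float:
    """Return the growth rate implied by a current value and its absolute increase."""
    base = current - increase  # value at the start of the period
    if base <= 0:
        raise ValueError("increase must be smaller than the current value")
    return increase / base


Q4_REVENUE_B = 119.1      # reported Q4 spending, in $ billions
SEQUENTIAL_GAIN_B = 12.0  # assumption: "nearly $12 billion" treated as exactly 12
YEARLY_GAIN_B = 29.0      # assumption: "$29 billion" treated as exact

sequential_growth = implied_growth(Q4_REVENUE_B, SEQUENTIAL_GAIN_B)
yearly_growth = implied_growth(Q4_REVENUE_B, YEARLY_GAIN_B)

print(f"Implied sequential growth: {sequential_growth:.1%}")
print(f"Implied nominal year-over-year growth: {yearly_growth:.1%}")
```

Under these assumptions, sequential growth comes out near 11% and nominal year-over-year growth near 32%. A nominal figure slightly above the adjusted one would be consistent with exchange-rate movements adding to the dollar totals, though the source does not break that out.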

Growth at this scale has not been seen since early 2022, when the market was roughly half its current size. The difference now, analysts say, is clear: generative AI has become the dominant demand engine.

At the same time, infrastructure vendors are moving aggressively to remove what many operators now see as the next bottleneck: the AI network fabric itself.

GenAI Puts the Cloud Market Into Overdrive

Synergy’s latest data underscores how profoundly AI workloads are reshaping cloud economics.

Public infrastructure-as-a-service (IaaS) and platform-as-a-service (PaaS) offerings, the primary engines for AI training and inference, grew 34% year over year in Q4, continuing a steady acceleration that began in early 2024. The top three providers remain firmly in control of the market, with Amazon holding a 28% share, followed by Microsoft at 21% and Google at 14%.
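To put those shares in dollar terms, the sketch below applies them to the $119.1 billion quarterly total. It assumes that the percentages are measured against that same total, which the source does not state explicitly. The variable names are illustrative, and the outputs are approximations rather than reported revenue.

```python
Q4_MARKET_B = 119.1  # reported Q4 cloud infrastructure spending, in $ billions

# Shares as cited in the article; assumed to apply to the Q4 total above.
shares = {"Amazon": 0.28, "Microsoft": 0.21, "Google": 0.14}

implied_revenue = {name: share * Q4_MARKET_B for name, share in shares.items()}
top_three_share = sum(shares.values())
rest_of_market_b = (1 - top_three_share) * Q4_MARKET_B

for name, revenue in implied_revenue.items():
    print(f"{name}: ~${revenue:.1f}B")
print(f"Top three combined share: {top_three_share:.0%}")
print(f"Everyone else: ~${rest_of_market_b:.1f}B")
```

On these assumptions, the top three account for 63% of quarterly spending, leaving roughly 37%, on the order of $44 billion, for all other providers.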

But the most telling signals are coming from the market’s second tier.

AI-focused providers including CoreWeave, OpenAI, Oracle, Crusoe, and Nebius are posting some of the fastest growth rates in the industry. In a particularly notable milestone, Synergy reports that CoreWeave, virtually absent from the rankings two years ago, is now generating more than $1.5 billion in quarterly cloud revenue, placing it among the top ten global providers.

John Dinsdale, Chief Analyst at Synergy Research Group, said the latest numbers reflect a structural shift in demand.

“GenAI has simply put the cloud market into overdrive,” Dinsdale said. “AI-specific services account for much of the growth since 2022, but AI technology has also enhanced the broader portfolio of cloud services, driving revenue growth across the board.”

The data suggests AI-native providers are no longer statistical noise in cloud growth. They are becoming meaningful contributors to incremental demand, even as hyperscalers continue to dominate overall market share.

The Next Constraint: Moving the Data

As AI clusters scale from tens of megawatts toward gigawatt-class campuses, operators are rediscovering a fundamental reality: compute without data movement is stranded capital.

Training and inference workloads generate massive east-west traffic patterns that place unprecedented stress on data center fabrics. In high-density GPU environments, even small inefficiencies in packet handling, congestion control, or load balancing can translate directly into longer job completion times and lower GPU utilization.
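A toy model makes the link concrete. The sketch below assumes each training step consists of a fixed compute phase plus communication time that is not overlapped with compute. The function names and the millisecond values are hypothetical, chosen only for illustration, and real fabrics behave in more complex ways.

```python
def gpu_utilization(compute_ms: float, exposed_comm_ms: float) -> float:
    """Fraction of each step the GPU spends computing rather than waiting on the network."""
    return compute_ms / (compute_ms + exposed_comm_ms)


def job_time_hours(steps: int, compute_ms: float, exposed_comm_ms: float) -> float:
    """Total job time when every step pays the compute and exposed communication cost."""
    return steps * (compute_ms + exposed_comm_ms) / 3_600_000  # 3.6 million ms per hour


COMPUTE_MS = 100.0  # hypothetical compute time per step
STEPS = 1_000_000   # hypothetical number of training steps

for comm_ms in (5.0, 10.0, 20.0):
    util = gpu_utilization(COMPUTE_MS, comm_ms)
    hours = job_time_hours(STEPS, COMPUTE_MS, comm_ms)
    print(f"exposed comm {comm_ms:>4.0f} ms -> utilization {util:.1%}, job time {hours:.1f} h")
```

In this simplified model, with a 100 ms compute phase, each additional millisecond of exposed communication per step lengthens the job by about 1% relative to a compute-only run and lowers utilization accordingly.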

That dynamic is increasingly shaping infrastructure roadmaps across the industry.

Cisco’s latest product announcements, unveiled at Cisco Live EMEA, offer a clear window into how vendors are responding.

Cisco Targets the AI Fabric Bottleneck

Cisco introduced its Silicon One G300, a new switching ASIC delivering 102.4 Tbps of throughput and designed specifically for large-scale AI cluster deployments. The chip will power next-generation Cisco Nexus 9000 and 8000 systems aimed at hyperscalers, neocloud providers, sovereign cloud operators, and enterprises building AI infrastructure.

The company is positioning the platform around a simple premise: at AI-factory scale, the network becomes part of the compute plane.

According to Cisco, the G300 architecture enables:

- 33% higher network utilization

- 28% reduction in AI job completion time

- Support for emerging 1.6T Ethernet environments

- Integrated telemetry and path-based load balancing
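A reduction in job completion time is not the same as an equal gain in throughput. The sketch below takes Cisco's 28% figure at face value and applies it to a hypothetical job (1,000 GPUs for 100 hours, both numbers invented for illustration) to show the implied GPU-hours saved and the equivalent increase in jobs completed per unit of time.

```python
JCT_REDUCTION = 0.28  # Cisco's claimed reduction in AI job completion time

# Hypothetical baseline job, for illustration only.
BASELINE_HOURS = 100.0
GPU_COUNT = 1_000

new_hours = BASELINE_HOURS * (1 - JCT_REDUCTION)
gpu_hours_saved = GPU_COUNT * (BASELINE_HOURS - new_hours)

# Jobs completed per unit of time scale with 1 / completion time.
throughput_gain = 1 / (1 - JCT_REDUCTION) - 1

print(f"New completion time: {new_hours:.0f} h")
print(f"GPU-hours saved per job: {gpu_hours_saved:,.0f}")
print(f"Throughput gain: {throughput_gain:.1%}")
```

Under these assumptions, and if jobs run back to back, the same cluster would complete about 39% more jobs in a given period.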

Martin Lund, EVP of Cisco’s Common Hardware Group, emphasized the growing centrality of data movement.

“As AI training and inference continues to scale, data movement is the key to efficient AI compute; the network becomes part of the compute itself,” Lund said.

The new systems also reflect another emerging trend in AI infrastructure: the spread of liquid cooling beyond servers and into the networking layer. Cisco says its fully liquid-cooled switch designs can deliver a nearly 70% improvement in energy efficiency compared with prior approaches, while new 800G linear pluggable optics aim to reduce optical power consumption by up to 50%.

Ethernet’s Next Big Test

Industry analysts increasingly view AI networking as one of the most consequential battlegrounds in the current infrastructure cycle.

Alan Weckel, founder of 650 Group, noted that backend AI networks are rapidly moving toward 1.6T architectures, a shift that could push the Ethernet data center switch market above $100 billion annually.

SemiAnalysis founder Dylan Patel was even more direct in framing the stakes.

“Networking has been the fundamental constraint to scaling AI,” Patel said. “At this scale, networking directly determines how much AI compute can actually be utilized.”

That reality is driving intense innovation across silicon, optics, and fabric software, particularly as AI deployments expand beyond hyperscalers into enterprises and sovereign environments.

From Hyperscale to Everywhere AI

Cisco’s messaging makes clear that the company sees the next wave of AI infrastructure broadening well beyond the largest cloud providers. Enhancements to its Nexus One platform, including unified fabric management and AI job observability tied to GPU telemetry, are aimed squarely at organizations trying to operationalize AI outside hyperscale environments.

This aligns closely with what Synergy’s data is already showing: while the hyperscalers remain the scale anchor, the marginal growth in AI infrastructure demand is increasingly distributed across neoclouds, service providers, and enterprises.

For data center operators, the implications are significant.

AI infrastructure is evolving into a tightly coupled system in which compute density, power delivery, cooling architecture, and network fabric efficiency must advance in lockstep. Improvements in GPU performance alone are no longer sufficient to guarantee workload efficiency or economic returns.

Infrastructure Enters Its Systems Era

A decade ago, cloud competition was largely about regions, pricing models, and virtualization efficiency. Today, the battleground has shifted deep into the physical and architectural layers of the data center.

The latest Synergy numbers confirm that GenAI demand is still accelerating cloud consumption at a historic pace. But Cisco’s G300 launch highlights the parallel reality emerging inside AI facilities: the race is now on to ensure the network fabric can keep up with the compute explosion.

As AI clusters push toward ever larger and denser deployments, the winners in the next phase of the market may be determined less by who can deploy the most GPUs and more by who can keep them fully fed.

For an industry entering the gigawatt era, the network is no longer just connective tissue. It is becoming core infrastructure.

At Data Center Frontier, we talk the industry talk and walk the industry walk. In that spirit, DCF Staff members may occasionally use AI tools to assist with content. Elements of this article were created with help from OpenAI's GPT5.


About the Author

Matt Vincent

A B2B technology journalist and editor with more than two decades of experience, Matt Vincent is Editor in Chief of Data Center Frontier.